This is the experimead that started it all. The experiment tested 12 yeast strains in short meads (8.5% ABV). The meads were evaluated using triangle tests and scoresheets by experienced home brewers and BJCP certified judges at home brew clubs across Canada.

The experiment tested various yeast strains for use in short meads (8.5% ABV, so shortish). The meads were evaluated using triangle tests and BJCP-type scoresheets by experienced home brewers and BJCP certified judges at Canadian home brew clubs. Kingston (KABOB), Toronto (GTAbrews plus professional brewers), Vancouver (VanBrewers), and Ottawa (AJ and crew from GotMead?) all participated in the experiment.

This experiment has two main goals:

- Test body of various yeast strains for improved mouth feel.

- Test ester profiles of various yeast strains for improved taste and aromatics.

Side goals:

- Do triangle tests for “identical” yeasts (US-05 / WLP001 / WY1056, etc.)

- Find meads that are clean and fast (test all meads before 8 weeks)

Experiment yeasts and their use in previous tests

Dry Ale Yeasts:

- US-05 (used by Golden coast meads, Colony Meadery, etc)

- Lallemand Abbaye (dBOMM 2015)

- CBC-1 (dBOMM 2015)

- Safbrew T-58

Ale Yeasts:

- WLP001 (BB 2016)

- WLP002 (BB 2016)

- WLP041 (BB 2016)

- WY1056 (BBR 2007)

- WY1388 (BBR 2007; BB 2016, BOMM 2015, dBOMM 2015)

Dry Wine Yeasts:

- 71B-1122 (BB 2016 control, commercial standard)

- D-47 (http://www.groennfell.com/recipes)

- EC-1118

References of the yeasts in previous experiments:

- Basic Brewing Radio (BBR), 8 November 2007, Beer Yeast Mead

- Billy Beltz (BB), 28 May 2016, The Great Ale Yeast Mead Experiment – Making Mead With Ale Yeast

- Denard Brewing (dBOMM), November 16 2015, Dry Yeast BOMM and Aromatic Mead Experiment

- Denard Brewing (BOMM), 8 March 2015, Ale Yeast and Belgian Ale Yeast Experiment – The birth of the BOMM

Experiment Parameters:

- Batch size 1 gallon

- Target OG: 1.064

- Target FG: 0.996-1.000

- Target ABV: 8.4-8.5%

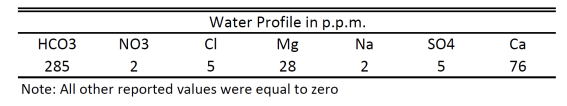

- Water profile – see table

- Honey: 1.52 lbs of Hogan’s white honey (clover, alfalfa, etc.)

- KHCO3: 0.5 grams (approx. 1/8 tsp)

- YAN Provided: 175 (excluding Go-ferm)

- Go-ferm: 12.5g (~525 YAN, with effectiveness of 3)

- Fermaid-O : 3.5g (148 YAN, with effectiveness of 4)

- Fermaid-K: 1g (27 YAN)

- Dry Yeast Weight: 10-11.5g yeast

Procedure:

- Rehydrated the yeast in Go-ferm, then added 1 tbsp of must every 30 minutes until the starter reached 500 ml after about three hours. All starters were bubbling away at time of pitch except for the 041, 1388 and 002. For Wyeast smack packs, smacked the pack and added half of the Go-ferm at 2 hours.

- Fermented in 4 liter plastic spring water jugs, leaving 500 ml of air space at the top. Pitched Fermaid-O and KHCO3 at time 0. Aerated the musts by shaking for 1 minute at time 0 and for 30 seconds at 24 hours.

- Fermentation temperature: 65-68°F

- Added Fermaid-K after 24h.

- Degassed all jugs by swirling for 1 minute at 12, 36, 48, 72 hours and at 1 week.

- Cold crash at 3 weeks for 3 days, added clarifier, waited to precipitate out, then bottled.

- Carbonate using carbonation tabs to 2.3 vol.

- Do triangle tests and judging within 6-8 weeks.

Experiment Execution and Initial Impressions

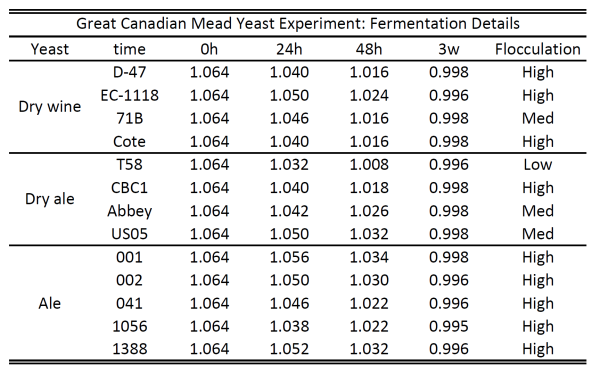

The wine yeasts went to town immediately and fermented much faster than the ale yeasts. The ale yeasts were also much more likely to foam up. During degassing, 1388, US-05, 001, and 1056 had lots of foam. Here are the details of the fermentation speed and flocculation by yeast type:

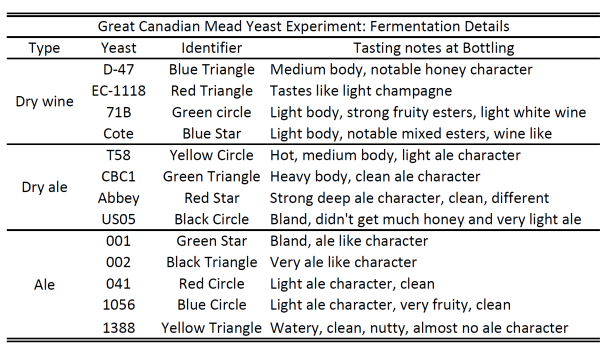

Upon bottling, I tasted all the meads and made some notes. There was a notable off-flavor from the nutrient present in some of the meads and I was hoping that the priming and some extra cold crashing would take care of most of that.

One of the interesting features was the ale-like flavor that came through on many of the ale yeasts. It tastes like a lager would smell if you let it go warm and sit out. It is not a fruity ester, and not particularly enjoyable. Interestingly, I found 001 to be slightly cleaner than US-05, and 1056 had noticeable fruity character. That character seemed to subside by the time of the triangle tests.

Another interesting feature was that the meads made with Wyeast were noticeably darker than the meads made with the other yeast sources. Wyeast smack packs use beer wort to activate, so meads made with them are also not gluten-free. The darker color showed up in both 1388 and 1056:

1056 vs US-05

Now on to the experiment and the evidence.

Experiment Evaluation

Kingston (KABOB), Toronto (GTAbrews plus professional brewers), Vancouver (VanBrewers), and Ottawa (AJ and crew from GotMead?) all participated in the evaluations. The participants were mostly BJCP certified beer and mead judges. There were also experienced home brewers and two professional brewers. All triangle tests were performed at two months from pitch, plus or minus a week. Participants performed triangle tests for pairs of meads. The triangle tests recorded whether they got the answer correct and which mead they preferred. Then all participants were asked to fill out a score sheet.

Here are the instructions given to the organizers, along with the sheet used to record the triangle tests (summarized below):

Instructions:

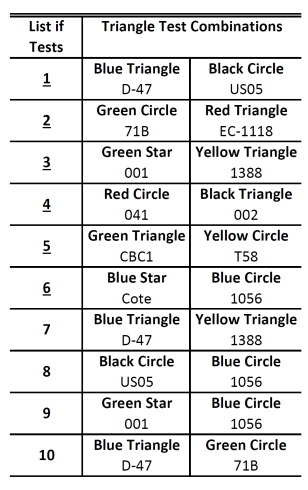

Begin by completing triangle tests # 1-6 in any order. If there is time or mead left over, complete the optional triangle tests # 7+.

Pour approximately ½ to 1 ½ ounces per opaque cup (red Solo cups were used). Pour the whole bottle into cups in one go and avoid the yeast at the bottom by keeping at least 2 ounces in the bottle (use leftovers for the optional tests). Place the appropriate sticker on the bottom of the cups. Give each participant three cups: two of one mead and one of the other. Giving half of the participants two of the first mead and the other half two of the second maximizes the number of participants. Ask participants to identify the odd one out.

Record if they get it correct and which they prefer. Use the correctly identified column to record how many people correctly or incorrectly identified the meads. Use the preferred meads column to record the preferred meads of all participants. Put a bar for those who correctly identified the meads, and an asterisk for those who did not.

See the first row for an example consisting of six people participating in a mock triangle test. Four correctly identified the odd mead, two did not, and half preferred each mead, but the two who incorrectly identified both preferred the mead in the right column.

Then ask them to complete the survey. Make sure they indicate which they preferred and if they got the triangle test correct on the left margin of the survey for each pair.

Once all tastings are complete you are free to open the “answers” envelope. Please return all score sheets and the triangle test results by mail. Participants are welcome to take pictures if they want to record their preferences.

Picking which meads to pair off was hard. I mainly use D-47 and US-05 for short meads, so I was really interested in potential improvements. I was also really interested in comparing yeasts used for commercial purposes. Here is a summary of the triangle tests:

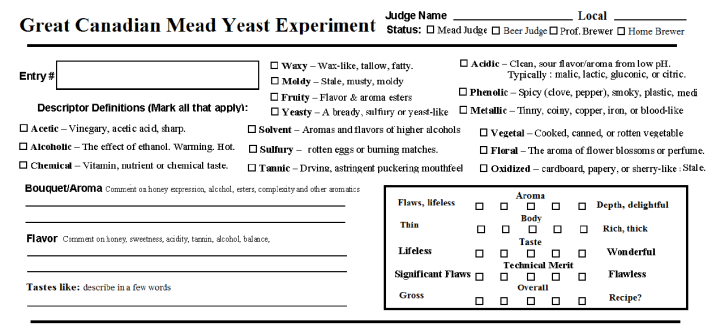

Here is an example of the ScoreSheet, which is summarized by the picture below:

Triangle Tests

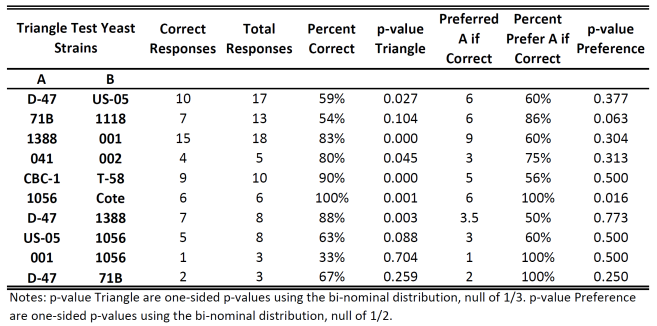

The results of the triangle tests are summarized in the table below. Not all home brew clubs conducted the same tests and the number of participants differed by club, so the number of participants differs by triangle test. Overall, participants were able to identify the odd mead at a statistically significant rate in 6 of the 10 triangle tests, using a significance level of 0.05. Two of the four that were not significant only had three participants, and the other two had p-values close to or under 0.1. Among those who correctly identified the meads, two tests showed significant preferences. The p-values were calculated from one-sided tests using the binomial distribution with a null of 1/3, the same as used by brulosophy.com. The p-value calculator came from onbrewing.com and can be found using the web link. Let's consider some triangle tests of interest.

D-47 and US-05 are the main yeasts used by most commercial meaderies for short meads. I interchange these yeasts in my own home brewing of short meads depending on the character I am looking for. Out of 17 participants, 10 were able to correctly identify the odd mead out. With a p-value of 0.027, this suggests that this was not merely a random choice. Out of the 10 that correctly identified the meads, 60 percent preferred the D-47. This does not significantly differ from a random choice of preference. Overall, this suggests that most people can tell them apart, but the participants are more or less split on preference.
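The identification p-value quoted above can be reproduced with a few lines of Python. This is a minimal sketch of the one-sided binomial test with a null of 1/3 described earlier, not the onbrewing.com calculator itself; the function name `triangle_p_value` is made up for illustration.

```python
from math import comb

def triangle_p_value(correct: int, participants: int, chance: float = 1 / 3) -> float:
    """One-sided binomial p-value: the probability of seeing at least
    `correct` right answers out of `participants` if everyone guessed."""
    return sum(
        comb(participants, k) * chance**k * (1 - chance) ** (participants - k)
        for k in range(correct, participants + 1)
    )

# D-47 vs US-05: 10 of 17 participants picked the odd mead out.
print(f"{triangle_p_value(10, 17):.3f}")  # 0.027
```

With only three participants, even a perfect result gives (1/3)^3, or about 0.037, which shows how little room the small tests had to reach significance.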

1388 and 001 are two ale yeasts that participants could distinguish at a significant rate. Out of 18 participants, 15 were able to correctly identify the odd mead out, with a corresponding p-value below 0.001. 60 percent of those who correctly identified preferred 1388 over 001. This does not significantly differ from a random choice of preference. Similarly, 7 of 8 participants were able to distinguish 1388 from D-47, but participants were split on preference. One participant was indifferent, hence the 0.5.

All 6 participants correctly identified and preferred 1056 to Cote. 5 out of 8 could distinguish US-05 from 1056, with a p-value of 0.088, but just 3 out of the 5 preferred US-05 to 1056.

71b came close to being significantly distinguished from 1118, with 7 out of 13 participants identifying the odd mead (p-value = 0.104). Of the seven who correctly identified it, 6 preferred 71b to 1118 (p-value = 0.063).
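The preference p-values can be sketched the same way. The write-up does not state the null used for preferences, so this assumes a one-sided binomial test against a 50/50 split among those who identified the odd mead; that assumption does reproduce the 71b figure above. The function name `preference_p_value` is made up for illustration.

```python
from math import comb

def preference_p_value(preferred: int, identifiers: int) -> float:
    """One-sided binomial p-value against a 50/50 split (assumed null):
    the chance that at least `preferred` of `identifiers` pick the same mead."""
    favourable = sum(comb(identifiers, k) for k in range(preferred, identifiers + 1))
    return favourable / 2**identifiers

# 71b vs 1118: 6 of the 7 correct identifiers preferred 71b.
print(f"{preference_p_value(6, 7):.4f}")   # 0.0625, reported above as 0.063

# D-47 vs US-05: 60 percent of 10 correct identifiers preferred D-47.
print(f"{preference_p_value(6, 10):.4f}")  # 0.3770
```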

Overall, the results from the triangle tests suggest that a lot more meads were able to be distinguished than in most beer triangle tests from http://brulosophy.com/. However, of those that correctly identified the meads, most were split on preferences. Interestingly, those with BJCP mead certification, myself, and AJ from Ottawa had nearly 100 percent correct responses. While I cannot provide an exact breakdown, since I did not record who got it correct for everyone, there was a clear divide. This may be due to experienced mazers knowing common mead flavors and the fact that the meads showed their quality quite clearly due to the white honey and the dryness of the meads.

Participants Tasting Notes

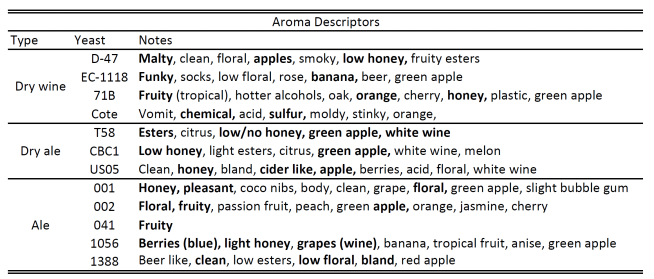

The following tables describe the aroma and flavor notes from the participants. Bold letters indicate descriptors that were used more than once.

Malty was used several times to describe the aroma and flavor of D-47. Apples and cider, with low honey character dominated descriptions of the aroma and flavor.

EC-1118 was described as funky by several participants. Low to no honey character was present. Cote was sour, with sulfur and vomit-like character in the aroma. Many described Cote as having better flavor, but it was still perceived to be sour with citrus, and little honey character. 71b was said to have fruity esters in the aroma, with a wide array of fruit descriptors, although tropical and orange notes were commonly perceived. In taste, 71b was described as a white wine, with citrus character.

Of the dry ale yeasts, T58 was not very well received. Descriptions of esters, heat, and green apple dominated. CBC1 was quite well received. While honey character was low, there were descriptions of green apple for the aroma and tart, fruity, cider and white wine like character in the taste. US-05 was described as clean, with clear honey notes, and very cider like by many participants. This matched the flavor descriptors of cider like, white wine and floral.

Interestingly, US-05 was perceived quite differently than its liquid yeast counterparts 001 and 1056. 001 had much clearer honey character, described as pleasant and floral in aroma and flavor. 1056 was perceived to be much more fruity. Berries, blueberry, light honey and grape dominated the descriptors of 1056. The honey character was clearer for 1056 in flavor with some green apple, and a balanced amount of acid.

002 was described as fruity and floral, with apple in the aroma. The flavor of 002 had clear honey notes and was found to be fruity, similar to an orange wheat beer character. 041 was evaluated by far fewer participants, but was described as clean and fruity. Finally, 1388 was described as low floral, beer like and bland by many participants. Similar notes came across in the taste with slight fruit, and white grapes.

Author's notes: I thought the overall quality of the meads was quite low. There was a distinct nutrient character that came across as a strong umami/brine character. This was mainly due to the high level of nutrients that were used. Toronto in particular had it bad since their evaluation was only a few days after the meads were shipped to them. Due to bottle conditioning, almost all participants from Toronto noted yeast character in their flavor profiles, which was not noted by most other participants.

Participants Overall and Mouthfeel Scores

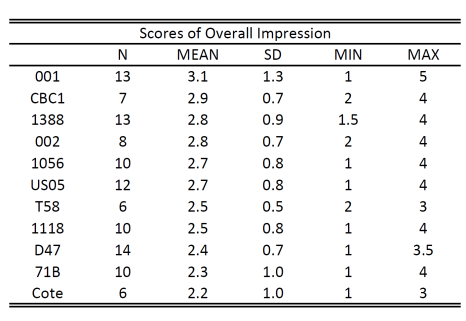

One of the difficulties of having a large number of yeasts to evaluate in triangle tests is having a picture of overall ranking. After all triangle tests, participants were asked to fill out the score sheets which contained five boxes for the quality of the overall impression and mouthfeel. To provide a picture of overall ranking, these boxes were converted into numbers from 1-5 so all of the meads can be compared.

In the score sheet, all participants were asked to rank the meads from “gross” to “recipe?” using five check boxes. Note that the number of observations differs by mead because of differences in sample and non-response. Moreover, the sample used includes all responses (unlike the preference data in the triangle tests), since the score sheets did not record who correctly identified the meads (something I need to add for next time). The perception was asked after each triangle test. Because of this, participants may have been influenced by the counterpart in the triangle tests. The number of observations, mean, standard deviation, min and max for the meads are reported in the following table.
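As a rough illustration of how check boxes become the summary columns in the tables, here is a small Python sketch. The responses and the names "Mead A" and "Mead B" are made up for illustration and are not data from the experiment. It also assumes a sample standard deviation, which the write-up does not specify.

```python
from statistics import mean, stdev

# HYPOTHETICAL check-box positions (1 = "gross", 5 = "recipe?").
# These responses are invented for illustration only.
responses = {
    "Mead A": [3, 4, 2, 3, 3],
    "Mead B": [2, 3, 2, 1],  # fewer sheets returned, so fewer observations
}

def summarize(scores):
    """Return the table columns: observations, mean, SD, min and max."""
    return {
        "n": len(scores),
        "mean": round(float(mean(scores)), 1),
        "sd": round(stdev(scores), 1),  # sample SD (assumption)
        "min": min(scores),
        "max": max(scores),
    }

for mead, scores in responses.items():
    print(mead, summarize(scores))
```

Because each mead has its own list, meads with missing sheets simply end up with a smaller `n`, which is why the observation counts differ across rows.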

Interestingly, the overall scores give a different ranking than what may be inferred from some of the data above. 001 was a clear winner with 3.1, followed by CBC1 with 2.9. 1388 and 002 tied for third with 2.8. Both US-05 and 1056 scored 2.7. The white wine yeasts were all close to the bottom of the ranking with 71b and Cote being quite low.

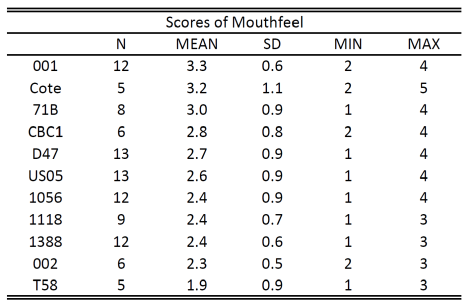

For mouthfeel, all participants were asked to rank the meads from “thin” to “rich-thick” using five check boxes. Converting these check boxes into numbers from 1-5, the meads can be compared by overall mouthfeel. The number of observations, mean, standard deviation, min and max for the meads are reported in the following table.

Interestingly, 001 came out on top for mouthfeel with an overall score of 3.3. Despite Cote being scored last in overall score, it came second in mouthfeel with 3.2, although the sample size was lower. D-47 and 1056 had scores of 2.7 and 2.6, respectively. 1056, 1118, 1388 all came in lower at 2.4, followed closely by 002 with 2.3. T58 scored the lowest with 1.9 but again had a small sample size.

To summarize, 001 and CBC1 came in quite high in overall impression and mouthfeel. Much of the mouthfeel would be coming from the carbonation and sensations of thickness in the meads. 1056 and US-05 came in the mid-range for overall score and mouthfeel. The meads were made with a light honey and at 8.5 percent, and were designed to be quite bland so that the yeasts could be better differentiated. Hence they were not really expected to produce significant honey flavor or mouthfeel. Even in the presence of nutrient off-flavors, many of the meads did quite well and ranked consistently across mouthfeel and overall impression.

Discussion of Experiment Design and Execution

The execution of the experiment lacked in a few regards.

The nutrient level was designed to be high (175 YAN), but ended up being much too high. All meads ended up suffering from nutrient/mineral off flavors. This is my biggest regret in hindsight. I attribute this to two reasons. First, the traditional advice is to calculate the YAN using Fermaid-K, Fermaid-O or DAP, and to ignore the YAN contribution of Go-ferm if the yeast is rehydrated in it. However, given the pitch rate in this experiment, the Go-ferm ended up providing most of the YAN (525 YAN). This is an extreme example of why the YAN contribution of Go-ferm should be taken into account (see my article on TANG, a revised nutrient schedule for short meads).
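A quick tally makes the size of the Go-ferm contribution concrete. This is just arithmetic on the YAN figures from the parameter list (which already include the effectiveness factors), in the same units as given there:

```python
# YAN contributions as listed in the experiment parameters.
yan = {"Go-ferm": 525, "Fermaid-O": 148, "Fermaid-K": 27}

planned = yan["Fermaid-O"] + yan["Fermaid-K"]  # what the design counted
total = sum(yan.values())                       # with Go-ferm included
goferm_share = yan["Go-ferm"] / total

print(planned)                # 175
print(total)                  # 700
print(f"{goferm_share:.0%}")  # 75%
```

Counting the Go-ferm, the meads received four times the planned 175 YAN, with three quarters of the total coming from the rehydration nutrient alone.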

Despite my best intentions, the pitch rate differed by strain. I tried to match the grams of yeast based on a pack of US-05, which was 11.5 grams. Only after the fact did I realize that Wyeast and White Labs packs contain less than half the yeast cells of a pack of dry yeast. Oops! This may be compounded by the fact that the viability of the wet yeasts may be different, with likely less nutrient storage. I based the high pitch rate on the assumption that you cannot over pitch yeast, but it turns out you can if you use Go-ferm at 1.25g per gram of yeast. In retrospect, I should have pitched half of the packet of US-05 and stuck with my use of only a pack of dry wine yeast. In particular, I wonder if the low yeast cell count in 001 helped clean up some of the nutrient off flavors compared to the other strains.

There is a lack of evidence on the appropriate water profile for short meads. However, I and other authors such as Bray Denard have noticed that this has an effect on the overall quality of the meads. In other batches in which I have used the same water profile, I found that there was a mineral quality to the short meads that took some time to come out. I have used soft tap water and distilled water in many of my short mead batches and have found that the Ca level makes a difference. Now I use TANG, a revised nutrient schedule for short meads that takes into account the starting water profile. An experiment on water quality is already underway.

While effort was made to have the meads evaluated early, I have noticed from my own experiments that bottle conditioning versus force carbonating makes a big difference. For example, I made a sparkling coffee maple mead that scored 36 and 40/50 at the VanBrewers competition, but only scored in the high 20s to low 30s when I sent it to two other competitions. The difference was that I force carbonated the mead for the VanBrewers competition but bottle carbonated the mead for the other competitions. Moreover, in my experience, bottle conditioning can really change the mead, which can take at least three months to really turn around for the better. If I were to repeat this experiment, I would evaluate the meads after at least three months, or force carbonate them. Bottle conditioning also increases the risk of mishandling: shipping created notable off flavors, particularly for Toronto, which evaluated the meads soon after they arrived.

Conclusions

Overall, I found these results to be quite surprising. 001 was a clear winner in overall score, but 60 percent of those who correctly identified the meads preferred 1388 to 001. The tasting notes from the California ale strains (001, US-05 and 1056) differed quite a bit. The wine yeasts did quite poorly in overall score, even though D-47 had 60 percent of the correct responders in the triangle tests prefer it over US-05.

It also surprised me how much these meads changed over time. At bottling many of the ale yeasts tasted like beer and had a lager-like quality to them. However, when evaluated after two months, much of this beer character had cleaned up, and there was much more of a mead and cider quality to them. Over time the nutrient off-flavor also dissipated, even though there was still a slight umami flavor to them.

I also realized that it is a lot more work to have more than two meads to evaluate. It is a lot easier to only evaluate two meads in a triangle test. However, it then becomes very difficult to account for more than two different meads. This experiment has raised lots of questions for me. I am planning future experiments in nutrients, water profiles, bottle priming sugars, sours etc. Stay tuned for more experimeads!

I want to thank all those that participated in the experiments. A special thanks to the members of KABOB, GTAbrews, VanBrewers and AJ from GotMead for all the work it took to run the tastings. Also a special thanks to AJ and Bray Denard for the referee reports. Thank you kindly.

Peer Review

Reviewer 1, Bray Denard https://denardbrewing.com/

Author's response to Reviewer 1

Thanks for the feedback. Excellent points. Let me first address your questions.

Reviewer 2, A.J. Ermenc, GotMead?

http://gotmead.com/blog/gotmead-live-radio-show/

I was happy to be a part of the Great Canadian Yeast Experiment. I had never participated in triangle tests before and was pleased to confirm that my own senses are actually sensitive enough to pick up on some subtle differences, even if my lack of experience with tasting was less able to describe the differences I perceived. I was surprised how different these batches came out tasting and smelling, just because of changing the yeast. I think this kind of experiment is something each meadmaker, winemaker and beer brewer should try on their own or with a group at least once, even if just to see for one’s self the yeast differences we all read about. I definitely see the value of testing like this with specific honeys, fruits, and combinations to dial in what is best for each batch and the process would definitely be of value for anyone making meads and wines commercially.

Author's response to Reviewer 2

Thanks A.J.! It was also fun to organize the tests. I agree that every mead maker could learn something by splitting a batch and trying out different yeast. I encourage every mead maker to do it at least once on a favorite recipe. For the record, I would like to mention that A.J. got every triangle test correct! She also had great descriptors for each of the meads and was one of the first persons to mention D-47 as malty.

Loved the experiment. I’ve had an interest in a few of those liquid ale yeasts for mead. I’ll have to get ahold of some WLP 001. Thanks for the great work

Kind of amazed there aren’t more comments on this. For what it’s worth, this kind of work is invaluable; you and those who spent their time organizing and compiling deserve some serious thanks. Yeah it isn’t perfect, yeah it’s missing variables, yeah yeah yeah — it’s a solid design and tons were learned. *gives the horns*
